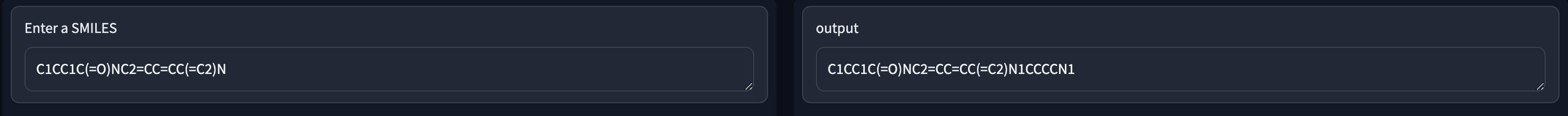

To learn how transformers work and how to build one, I made CGPT (Chemical Generative Pretrained Transformer) using only PyTorch and a tokenizer library. Like GPT-3, CGPT is trained on a large number of sequences to learn to complete a sequence. Here the sequences are SMILES strings (a text format that represents molecular structure) instead of English.
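As a rough illustration of what "trained to complete the sequence" means, here is a minimal sketch of how a SMILES string can be turned into next-token training pairs. It is not the CGPT code: the character-level vocabulary, the special tokens (`<pad>`, `<bos>`, `<eos>`), and the helper names `build_vocab`, `encode`, and `next_token_pairs` are all assumptions made for this example, and the actual project uses a tokenizer library instead.

```python
# Sketch only: a hypothetical character-level tokenizer, not the one CGPT uses.

def build_vocab(smiles_list):
    """Map every character seen in the corpus, plus special tokens, to an integer id."""
    chars = sorted({ch for s in smiles_list for ch in s})
    specials = ["<pad>", "<bos>", "<eos>"]  # assumed special tokens
    return {tok: i for i, tok in enumerate(specials + chars)}

def encode(smiles, vocab):
    """Wrap the SMILES string in begin/end markers and convert it to ids."""
    return [vocab["<bos>"]] + [vocab[ch] for ch in smiles] + [vocab["<eos>"]]

def next_token_pairs(ids):
    """Inputs are every token but the last; targets are the same ids shifted left by one."""
    return ids[:-1], ids[1:]

corpus = ["CCO", "c1ccccc1", "CC(=O)O"]  # ethanol, benzene, acetic acid
vocab = build_vocab(corpus)
ids = encode("CCO", vocab)
inputs, targets = next_token_pairs(ids)

# At each position the model sees inputs[: i + 1] and is trained to predict targets[i].
for i in range(len(inputs)):
    print(inputs[: i + 1], "->", targets[i])
```

A character-level split is the simplest choice for a sketch, but it would break two-letter atoms such as `Cl` and `Br` into separate tokens, which is one reason a real tokenizer is the better option in practice.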

It seems to have learned the number of bonds each atom usually makes, and common sub-sequences of atoms in molecules.

I trained for only 5 epochs (2 days on my laptop with a 3090), and the loss was still going down, so the model is probably undertrained for any practical use.

David Yang helped me format the SMILES data downloaded from PubChem.

I’m grateful to Umar Jamil for creating an awesome video on transformers that helped tremendously!