Results#

Making Videomimic Mimic Hands#

Videomimic takes a casual video (such as one shot on a smartphone) of a human performing some action, reconstructs the whole scene and the human motion in simulation, trains a robot policy to mimic that motion, and then distills the policy onto a real robot for deployment.

Videomimic does not include hand motion or facial expression mimicry. I was fortunate enough to work on a mini project that extends Videomimic to incorporate hands.

Here is a demo of Videomimic with and without the hand extension I made.

Initial input#

BEFORE (no hand mimic)#

AFTER (with hand mimic)#

You can see the hands go from always open to slightly closed, mirroring the video more closely.

The hands are still much more open than they should be. When hand pose detection fails on a frame (perhaps because of occlusion), the hand pose for that frame defaults to the fully open hand. The temporal blending then mixes the closed and open hands from neighboring timesteps, which produces a semi-open hand.
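The effect can be reproduced with a toy example. The sketch below is not the Videomimic code: it reduces the hand pose to a single hypothetical "curl" value (1.0 for a closed fist, 0.0 for the open fallback pose), and a plain moving average stands in for whatever temporal blending the real pipeline applies.

```python
def smooth(values, window=5):
    """Centered moving average; the window shrinks at the sequence edges."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out

# Hypothetical scalar pose: 1.0 = closed fist, 0.0 = fully open hand.
true_curl = [1.0] * 10  # the hand is actually closed in every frame
detected = [True, True, False, True, True, False, False, True, True, True]

# Frames with a failed detection fall back to the open hand (0.0).
filled = [curl if ok else 0.0 for curl, ok in zip(true_curl, detected)]

# Blending over time pulls the neighbors of every failed frame toward open.
blended = smooth(filled, window=5)
print([round(b, 2) for b in blended])
```

Even though the hand is closed throughout, almost every blended frame ends up strictly between open and closed, which matches the semi-open hands in the demo.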

The Videomimic pipeline can then create a simulation from this reconstruction, train a policy with RL, and deploy it on a real robot. Perhaps in the future my extension will be used for hand manipulation in addition to locomotion:

Check out the Videomimic website for more info.

Viser#

If you are wondering what the 3D visualizer is, it is called viser. Below is an interactive one I made. It is a recording of an optimization that moves some balls from their start positions (blue) to a target (green) while avoiding the black ball, which I am moving around with the mouse.

Try dragging your mouse below!

It is a fun, visual project to try if you want to get familiar with JAX and viser.
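For a rough idea of what such an optimization involves, here is a minimal sketch in plain Python for a single ball in 2D. It is an assumption about the general shape of the problem, not the code behind the demo: the cost function, the keep-out radius, the penalty weight, and the step size are all made up for illustration. In JAX, the hand-written gradient would typically be replaced by `jax.grad` of the cost.

```python
import math

def cost_grad(p, target, obstacle, radius=1.0, weight=10.0):
    """Gradient of |p - target|^2 + weight * max(0, radius - |p - obstacle|)^2."""
    gx, gy = 2 * (p[0] - target[0]), 2 * (p[1] - target[1])
    dx, dy = p[0] - obstacle[0], p[1] - obstacle[1]
    dist = math.hypot(dx, dy)
    if 1e-9 < dist < radius:
        # Inside the keep-out radius: the penalty gradient points toward
        # the obstacle, so the descent step pushes the ball away from it.
        scale = -2 * weight * (radius - dist) / dist
        gx += scale * dx
        gy += scale * dy
    return gx, gy

def optimize(start, target, obstacle, steps=300, lr=0.02):
    """Plain gradient descent; returns every visited position."""
    p = list(start)
    path = [tuple(p)]
    for _ in range(steps):
        gx, gy = cost_grad(p, target, obstacle)
        p[0] -= lr * gx
        p[1] -= lr * gy
        path.append(tuple(p))
    return path

# The obstacle sits almost exactly between start and target.
path = optimize(start=(-3.0, 0.1), target=(3.0, 0.0), obstacle=(0.0, 0.0))
closest = min(math.hypot(x, y) for x, y in path)
print("final position:", path[-1], "closest approach:", round(closest, 2))
```

The penalty is soft, so the ball dips somewhat inside the keep-out radius before sliding around the obstacle. Each entry of `path` could be sent to a visualizer to animate the optimization.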

How I added hands#

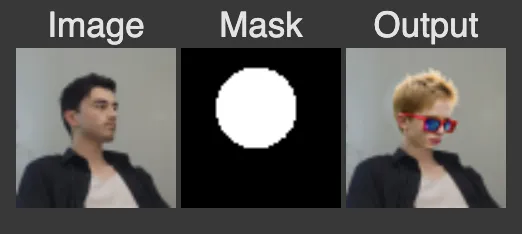

- Use WiLoR to estimate MANO hand poses for every frame, with each frame processed independently. This is what the hand detections look like:

- Create a SMPL body model for each frame by running VIMO (which processes the frames as a video)

- Create a SMPLX body + hands + jaw model for each frame by combining the MANO and SMPL files. (The jaw pose is set to the identity for now.)

- Modify the Hunter (JAX) optimization, which optimizes the human poses and the scene reconstruction, to use a SMPLX file instead of a SMPL file

- Modify the visualization code (which uses viser) to render a SMPLX model and to take in the SMPLX file saved by the optimization in the previous step
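The combining step can be sketched as follows. This is an illustration under stated assumptions rather than the code I wrote: the function name is hypothetical, poses are flat axis-angle lists, and the dictionary keys follow the parameter names commonly used for SMPL-X models. It relies on the fact that SMPL's 23 body joints end with two single-joint hands, which SMPL-X replaces with 15 finger joints per hand.

```python
NUM_SMPL_BODY_JOINTS = 23   # SMPL body joints, excluding the root
NUM_SMPLX_BODY_JOINTS = 21  # SMPL-X drops SMPL's two single-joint hands
NUM_HAND_JOINTS = 15        # MANO finger joints per hand

def combine_smpl_and_mano(global_orient, smpl_body_pose,
                          left_hand_pose, right_hand_pose):
    """Build SMPL-X pose parameters for one frame (hypothetical helper)."""
    assert len(global_orient) == 3
    assert len(smpl_body_pose) == NUM_SMPL_BODY_JOINTS * 3
    assert len(left_hand_pose) == NUM_HAND_JOINTS * 3
    assert len(right_hand_pose) == NUM_HAND_JOINTS * 3
    identity = [0.0, 0.0, 0.0]  # zero axis-angle = no rotation
    return {
        "global_orient": list(global_orient),
        # Keep the first 21 body joints; the last two SMPL joints are the hands.
        "body_pose": list(smpl_body_pose[: NUM_SMPLX_BODY_JOINTS * 3]),
        "jaw_pose": list(identity),   # jaw left at the identity for now
        "leye_pose": list(identity),  # eyes are not estimated here
        "reye_pose": list(identity),
        "left_hand_pose": list(left_hand_pose),
        "right_hand_pose": list(right_hand_pose),
    }

# Usage with placeholder values for a single frame.
frame = combine_smpl_and_mano(
    global_orient=[0.0] * 3,
    smpl_body_pose=[0.1] * 69,
    left_hand_pose=[0.2] * 45,
    right_hand_pose=[0.3] * 45,
)
print({name: len(values) for name, values in frame.items()})
```

A real implementation has more to reconcile than this sketch shows, for example the rotation format produced by the hand estimator, the wrist orientation, and the body shape parameters, which as far as I know are not directly interchangeable between SMPL and SMPL-X.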

This part is not specific to the hand extension: ViTPose is run to estimate where the joints should be, and SAM 2 is run to segment foreground pixels from background pixels. Here are the outputs of each for our video.

These are used in the joint optimization step to fit the human joint positions and angles, and to adjust the principal components of the body shape (for example, how heavy-set the person is).

Shortcomings and future work#

- When WiLoR fails to detect a hand, the identity pose, which is the fully extended hand, is assumed. Combined with the temporal consistency cost in the optimization, this makes the hands open up when they should remain closed, as seen in the video at the top of the post. Supporting missing hand detections and interpolating between neighboring valid hand poses could be a good fix.

- Changing the default hand pose to the natural resting pose instead of the fully open pose could be a better prior for the hand optimization.

- Foot contact (BSTRO) is not implemented yet for SMPLX.
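The interpolation idea from the first item could look roughly like the sketch below. It is untested against the real pipeline and makes two simplifying assumptions: a failed detection is represented as `None`, and poses are blended linearly per component. For real axis-angle or rotation-matrix poses, a proper rotation interpolation (such as slerp on quaternions) would be the safer choice.

```python
def fill_missing(poses):
    """Fill None entries by interpolating between the nearest valid frames.

    Gaps at the start or end of the sequence copy the nearest valid pose.
    """
    valid = [i for i, pose in enumerate(poses) if pose is not None]
    if not valid:
        raise ValueError("no valid hand pose in the sequence")
    filled = []
    for i, pose in enumerate(poses):
        if pose is not None:
            filled.append(list(pose))
            continue
        prev = max((j for j in valid if j < i), default=None)
        nxt = min((j for j in valid if j > i), default=None)
        if prev is None:
            filled.append(list(poses[nxt]))
        elif nxt is None:
            filled.append(list(poses[prev]))
        else:
            t = (i - prev) / (nxt - prev)  # 0 at prev, 1 at nxt
            filled.append([(1 - t) * a + t * b
                           for a, b in zip(poses[prev], poses[nxt])])
    return filled

# Toy 2-value poses: the hand relaxes from closed to half closed.
closed, half = [1.0, 1.0], [0.5, 0.5]
frames = [None, closed, None, None, half, None]
filled = fill_missing(frames)
print(filled)
```

With this kind of fill, a missed detection no longer injects a fully open hand into the sequence, so a temporal consistency cost has nothing to pull the fingers open.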

Unfortunately, I was asked not to share this code because the Videomimic code has not been released yet, so I am not able to provide a repository for it.