Can computers get depth information from a 2D picture?

As humans, we can make a very good guess at the depth of each pixel in a single photograph. This is most likely a combination of context clues (vanishing lines, partial occlusions, etc.) and past experience (a chair is usually a certain size).

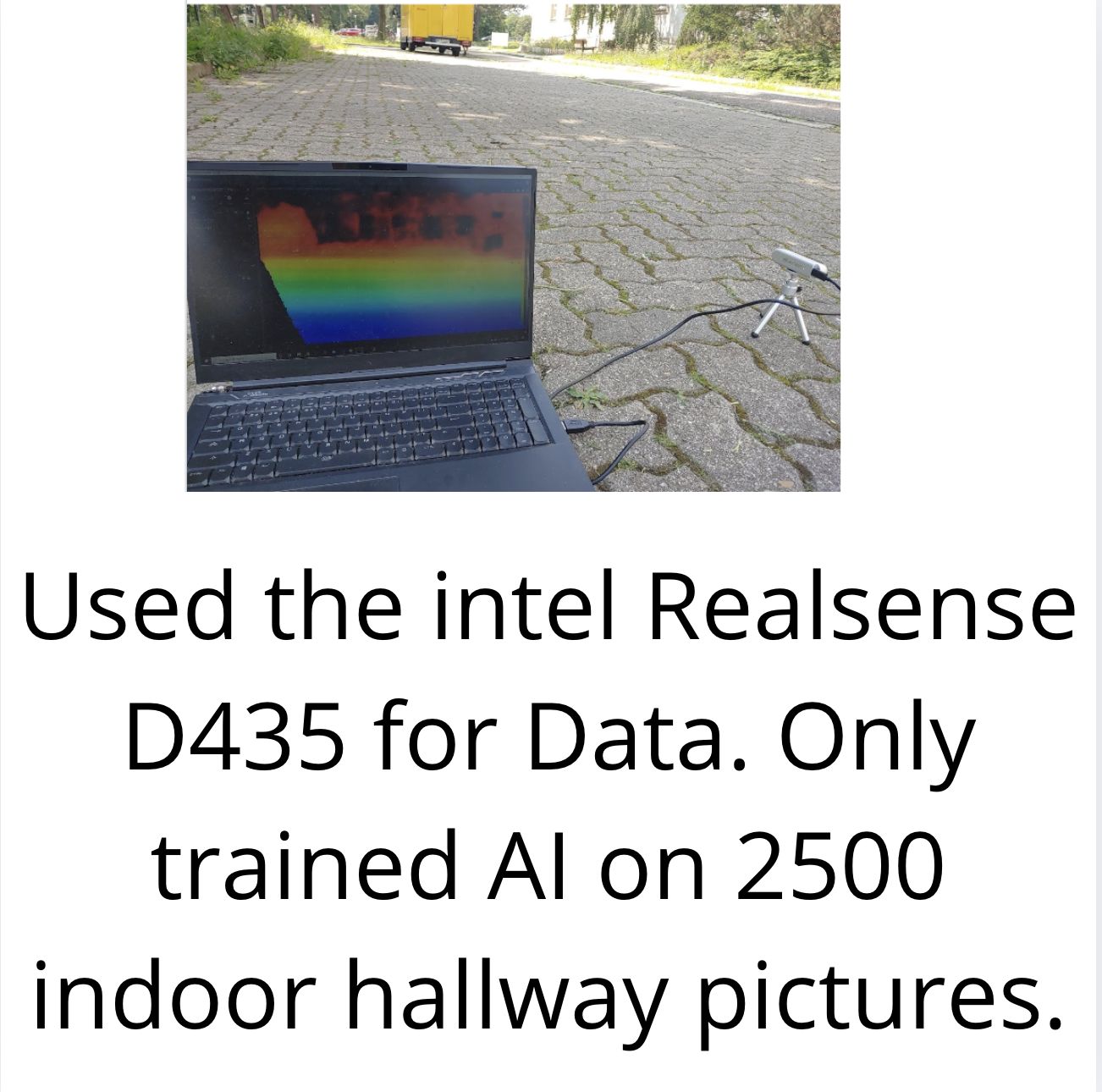

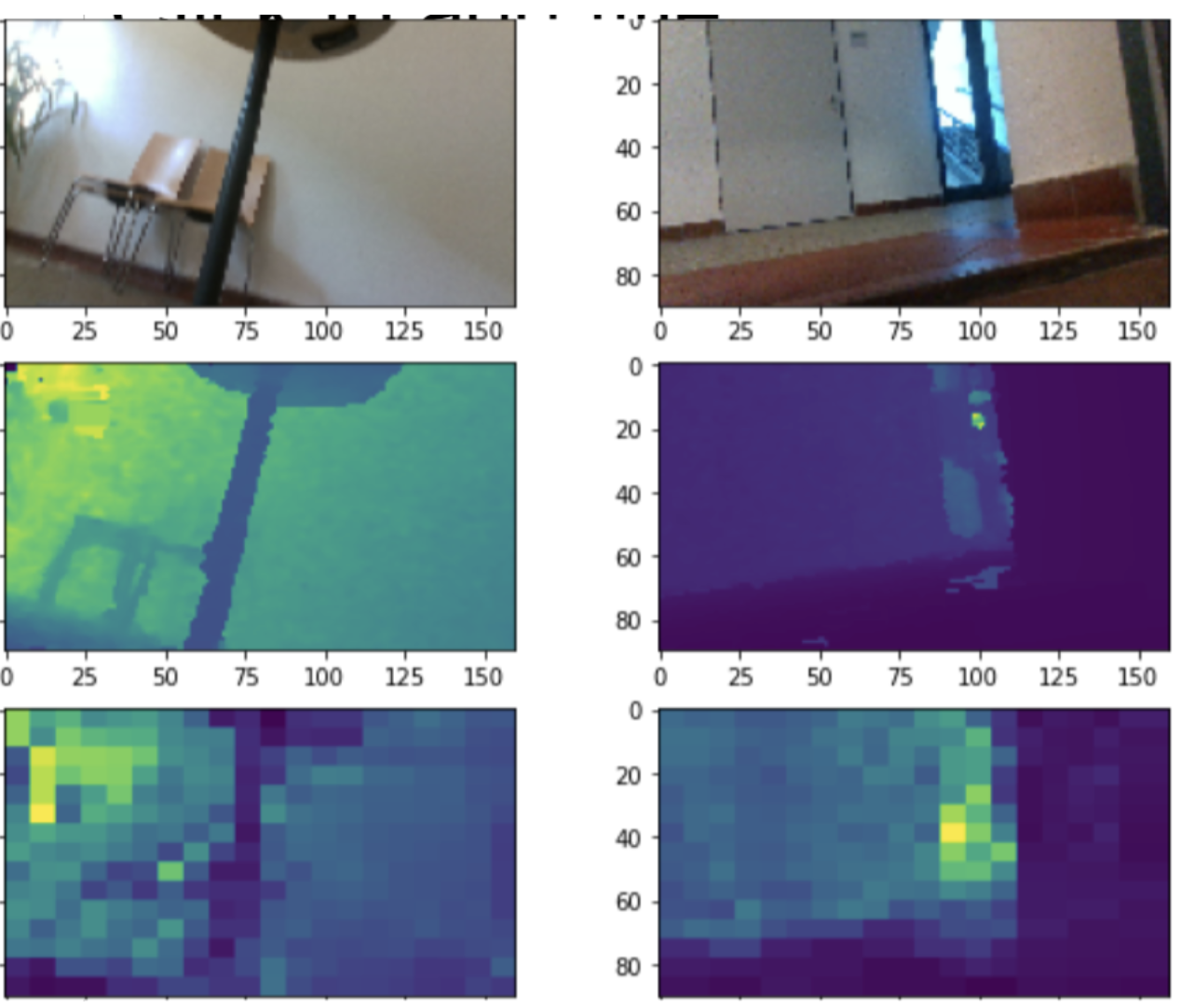

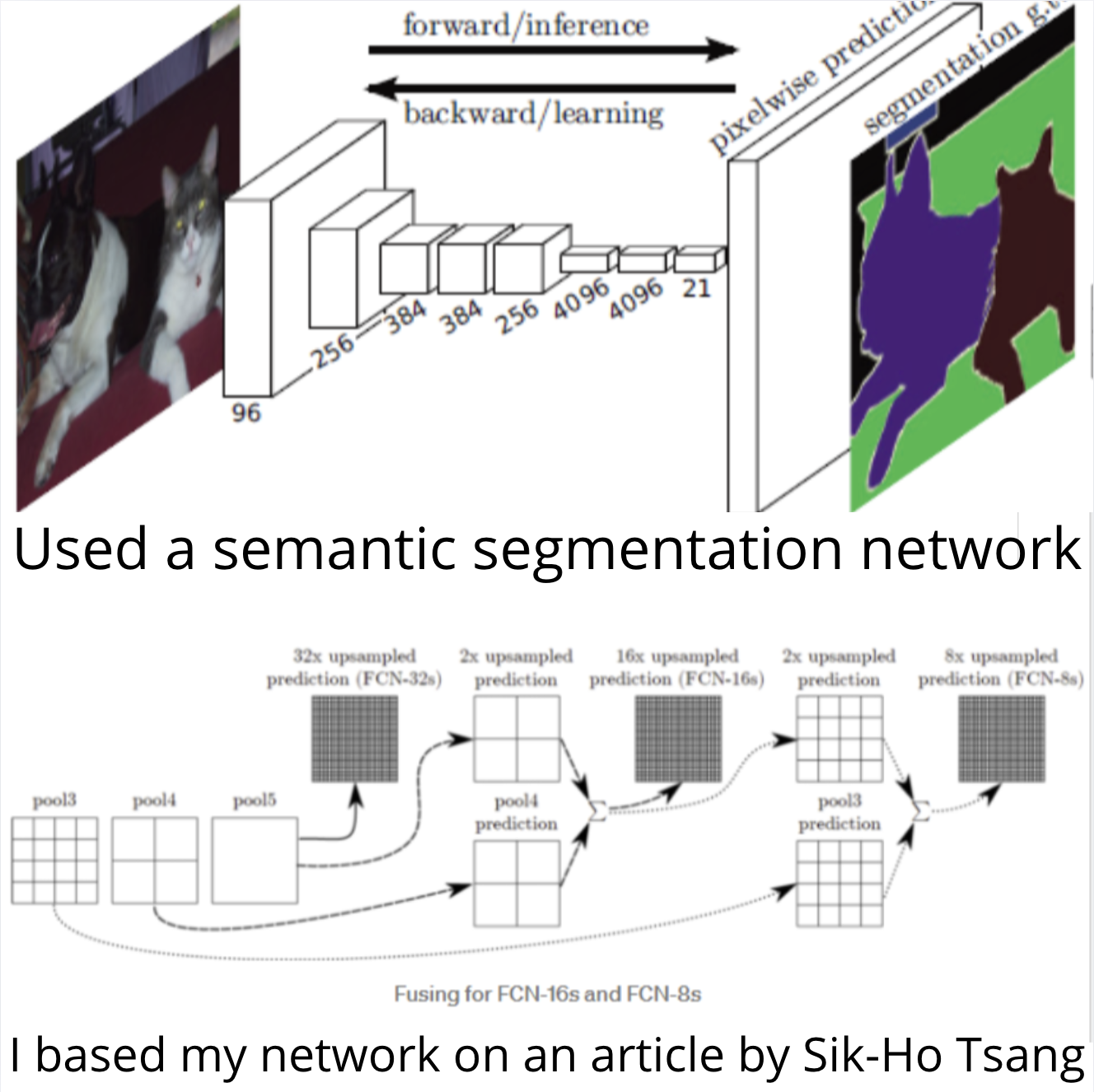

To answer this question, I built a machine learning model (a U-Net) to predict a depth image from an RGB image. For training data, I bought an Intel RealSense D435 (a stereo camera that outputs both depth and RGB) and recorded a bunch of frames around the building.

For the demo, I used a Raspberry Pi to drive a model car forward while avoiding obstacles, using the depth information predicted by the model.
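To make the obstacle-avoidance idea concrete, here is a minimal sketch of how a predicted depth map could be turned into a driving decision. It is not the code that ran on the car: the function name `choose_direction`, the three-band split, the 0.5 m `stop_distance` threshold, and the convention that non-positive depth values mean "no reading" are all assumptions made for illustration.

```python
from typing import Sequence


def choose_direction(depth: Sequence[Sequence[float]],
                     stop_distance: float = 0.5) -> str:
    """Pick 'forward', 'left', 'right', or 'stop' from a depth map in metres.

    Hypothetical helper: splits the image into left, center, and right
    vertical bands and compares the nearest obstacle in each band.
    The depth map must be at least 3 pixels wide.
    """
    width = len(depth[0])
    third = width // 3

    def nearest(col_start: int, col_end: int) -> float:
        # Assumption: values <= 0 are missing readings and are ignored.
        values = [row[c] for row in depth
                  for c in range(col_start, col_end) if row[c] > 0]
        # A band with no valid readings is treated as clear.
        return min(values) if values else float("inf")

    left = nearest(0, third)
    center = nearest(third, width - third)
    right = nearest(width - third, width)

    if center > stop_distance:
        return "forward"   # path straight ahead is clear
    if max(left, right) <= stop_distance:
        return "stop"      # blocked on every side
    # Steer toward whichever side has more free space.
    return "left" if left > right else "right"


if __name__ == "__main__":
    frame = [[2.0, 2.0, 0.3, 0.3, 0.4, 0.4]]
    print(choose_direction(frame))
```

Using the nearest pixel per band is deliberately conservative: a single close obstacle is enough to trigger a turn. A real controller would probably smooth the decision over several frames to avoid reacting to noisy predictions.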

Raspberry Pis are very slow at machine learning, so I had to lower the resolution and make the car stop at every input frame, but it works!
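The post does not say how the resolution was lowered. One simple option, shown here only as an assumed illustration, is to average non-overlapping blocks of pixels before feeding the frame to the model. The `downsample` function and its `factor` parameter are hypothetical names, and the sketch works on a single-channel grid of numbers to stay dependency-free.

```python
from typing import List, Sequence


def downsample(image: Sequence[Sequence[float]], factor: int) -> List[List[float]]:
    """Shrink a 2D grid by averaging non-overlapping factor x factor blocks.

    Rows and columns that do not fill a complete block are dropped.
    """
    rows = len(image) // factor
    cols = len(image[0]) // factor
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            # Collect every pixel in the current block.
            block = [image[r * factor + i][c * factor + j]
                     for i in range(factor) for j in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


if __name__ == "__main__":
    small = downsample([[1, 3, 5, 7],
                        [1, 3, 5, 7]], 2)
    print(small)  # [[2.0, 6.0]]
```

Halving each side cuts the pixel count by four, which is roughly how much less work the model's convolutions have to do per frame. In practice an image library's resize function would do the same job faster.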